Be aware: this blog post contains my notes on some investigation work I recently undertook with MassTransit, the open source service bus for .NET. The code here was just sufficient to answer some basic questions about whether a MassTransit-based endpoint could be hosted in a .NET Core 3.1 Worker Service running on Linux.

NB: This is all “hello world” style code so don’t look here for the best way to do things.

My objectives were:

- To configure MassTransit to run in a .NET Core Worker Service.

- To test a MassTransit-based Worker Service running in a Linux container using Docker.

- To test a MassTransit-based Worker Service running on a Linux host as a systemd daemon.

- To use AWS SQS as the transport for MassTransit.

MassTransit in a Worker Service

This step turned out to be straightforward. To reiterate, my approach almost certainly isn’t best practice; it served only to demonstrate feasibility.

I created a Worker Service using Visual Studio and adapted the template project to suit. First up I installed a few NuGet packages to enable MassTransit using AWS SQS transport, and support for dependency injection. I also added support to deploy the Worker Service as a systemd daemon.
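The packages aren't listed above, so here is a rough sketch of installing them from the command line. The three package names are my best reading of what the code below needs (the SQS transport, the dependency injection extensions for `AddMassTransit`, and the `UseSystemd()` host extension); treat them as assumptions. You may also need to pin versions that still target .NET Core 3.1.

```shell
# Assumed package names; the post does not list them explicitly.
PACKAGES="MassTransit.AmazonSQS MassTransit.Extensions.DependencyInjection Microsoft.Extensions.Hosting.Systemd"

# Run from the Worker Service project directory.
# Add "--version <x.y.z>" if you need a release compatible with .NET Core 3.1.
for package in $PACKAGES; do
    dotnet add package "$package"
done
```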

I was then able to configure the MassTransit bus using dependency injection. However, I chose not to start the bus at this point but rather use the Worker Service lifetime events to do that (described later).

    public class Program
    {
        public static void Main(string[] args)
        {
            var host = CreateHostBuilder(args).Build();
            var logger = host.Services.GetRequiredService<ILogger<Program>>();
            logger.LogInformation("Responder2 running.");
            host.Run();
        }

        private static IHostBuilder CreateHostBuilder(string[] args) =>
            Host.CreateDefaultBuilder(args)
                // Integrates with systemd when run as a daemon.
                .UseSystemd()
                .ConfigureServices((hostContext, services) =>
                {
                    // The Worker starts and stops the bus (see below).
                    services.AddHostedService<Worker>();
                    services.AddMassTransit(serviceCollectionConfigurator =>
                    {
                        serviceCollectionConfigurator.AddConsumer<Request2Consumer>();
                        serviceCollectionConfigurator.AddBus(serviceProvider =>
                            Bus.Factory.CreateUsingAmazonSqs(busFactoryConfigurator =>
                            {
                                busFactoryConfigurator.Host("eu-west-2", h =>
                                {
                                    // Placeholder credentials.
                                    h.AccessKey("xxx");
                                    h.SecretKey("xxx");
                                });
                                busFactoryConfigurator.ReceiveEndpoint("responder2_request2_endpoint",
                                    endpointConfigurator =>
                                    {
                                        endpointConfigurator.Consumer<Request2Consumer>(serviceProvider);
                                    });
                            }));
                    });
                });
    }

Note that I set up a simple message consumer that replies using MassTransit's request/response pattern, just for testing purposes.

    public class Request2Consumer : IConsumer<IRequest2>
    {
        private readonly ILogger<Request2Consumer> _logger;

        public Request2Consumer(ILogger<Request2Consumer> logger)
        {
            _logger = logger;
        }

        public async Task Consume(ConsumeContext<IRequest2> context)
        {
            _logger.LogInformation("Request received.");
            await context.RespondAsync<IResponse2>(new { Text = "Here's your response 2." });
        }
    }

For the purposes of this example I chose to use the hosted service lifetime events to start and stop the MassTransit bus. Rather than inherit from the BackgroundService base class, which provides an ExecuteAsync(CancellationToken) method that I didn’t need, I implemented IHostedService directly and used its StartAsync(CancellationToken) and StopAsync(CancellationToken) methods to start and stop the bus respectively.

    public class Worker : IHostedService
    {
        private readonly ILogger<Worker> _logger;
        private readonly IBusControl _bus;

        public Worker(ILogger<Worker> logger, IBusControl bus)
        {
            _logger = logger;
            _bus = bus;
        }

        public Task StartAsync(CancellationToken cancellationToken)
        {
            _logger.LogInformation("Starting the bus...");
            return _bus.StartAsync(cancellationToken);
        }

        public Task StopAsync(CancellationToken cancellationToken)
        {
            _logger.LogInformation("Stopping the bus...");
            return _bus.StopAsync(cancellationToken);
        }
    }

I used a separate console application (not shown here) to send messages to the Worker Service, which I initially ran as a console application on Windows. That worked pretty much immediately, showing that the .UseSystemd() call is a no-op when not running under systemd, as expected. Great for development on Windows.

Worker Service in Docker

Running the Worker Service in Docker was also quite straightforward. I chose to use the sdk:3.1-bionic Docker image which uses Ubuntu 18.04. This choice of Linux flavour was arbitrary.

I had intended to try and get systemd running in the Docker container, but I quickly realised that such an approach would run contrary to how you should use Docker. With Docker, you really want to containerise your application. Services such as systemd aren’t available to you. So, I simply invoked the Worker Service DLL with dotnet as the entry point.

    # Build with SDK image
    FROM mcr.microsoft.com/dotnet/core/sdk:3.1-bionic AS build
    WORKDIR /app
    COPY . ./
    RUN dotnet restore \
        && dotnet publish ./MassTransit.WorkerService.Test.Responder/MassTransit.WorkerService.Test.Responder.csproj -c Release -o out

    # Run with runtime image
    FROM mcr.microsoft.com/dotnet/core/runtime:3.1-bionic
    WORKDIR /app
    COPY --from=build /app/out .
    ENTRYPOINT ["dotnet", "MassTransit.WorkerService.Test.Responder.dll"]

This worked as expected, with the Worker Service responding to messages sent to it by my test console application. In effect, the Worker Service was running as if it were a console application.

So, build the Docker image…

Run the container…
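The build and run steps can be sketched roughly as follows. The image name is a made-up placeholder, and the sketch assumes the Dockerfile sits in the current directory alongside the solution.

```shell
# Hypothetical image/container name; any valid tag will do.
IMAGE="masstransit-responder"

# Build from the directory containing the Dockerfile (it copies the whole
# directory into the build stage and publishes the project from a subfolder).
docker build -t "$IMAGE" .

# Run in the foreground so the Worker Service's console logging is visible.
# --rm removes the container once it exits.
docker run --rm --name "$IMAGE" "$IMAGE"
```

Stopping the container with Ctrl+C (or `docker stop`) should trigger the host's shutdown, which in turn calls StopAsync on the Worker and stops the bus.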

All looks good so use a test console application to send a message and see what happens…

Worked like a charm.

Worker Service as a systemd daemon

It’s worth saying up front that I found the following blog post of considerable use:

To test this out I could have spun up a Linux machine in AWS (other cloud platform providers are available) but I chose to experiment with a Raspberry Pi. Note that the code for the Worker Service was unchanged from when it was run in Docker (see above).

To run the Worker service as a systemd daemon on Linux you first need to build the application with the appropriate Target Runtime. See the following documents for more information on that:

However, being a bit lazy, I chose to publish directly from Visual Studio and copy the files to the Pi manually later. For this I selected the linux-arm runtime. Note also that, to avoid installing the dotnet runtime on the device, I chose the Deployment Mode of Self-contained.
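The same publish can also be done from the command line. A minimal sketch, assuming the project path used in the Dockerfile earlier and a hypothetical output folder:

```shell
# Project path as used in the Dockerfile; the output folder is a placeholder.
PROJECT="./MassTransit.WorkerService.Test.Responder/MassTransit.WorkerService.Test.Responder.csproj"
RUNTIME="linux-arm"
OUTPUT="./publish/$RUNTIME"

# -r selects the target runtime; --self-contained bundles the .NET runtime
# so it does not need to be installed on the device.
dotnet publish "$PROJECT" -c Release -r "$RUNTIME" --self-contained true -o "$OUTPUT"
```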

The effect of selecting linux-arm as the Target Runtime is that there’s an extension-less binary that’s executable on Linux included in the published folder. This file is important later on when we configure systemd.

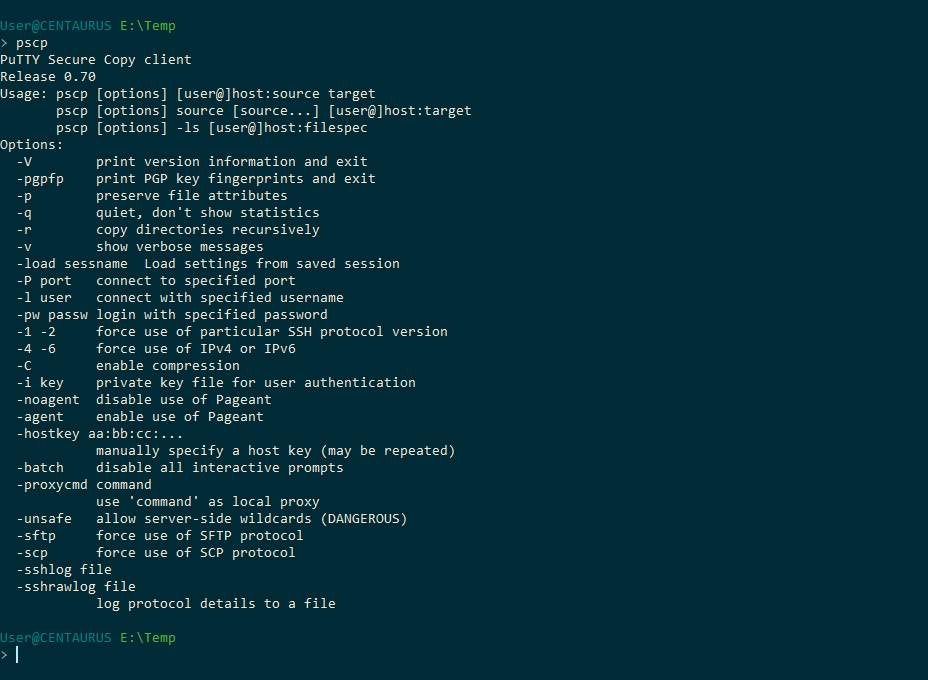

The next step is to copy the files to the target device. I copied mine to a directory called /app/linux-arm. Note that you also need to set executable permissions so systemd can run the application.
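Setting the executable bit might look like this when run on the Pi. The binary name is an assumption: it is taken to match the DLL name used in the Dockerfile earlier.

```shell
# Directory from the post; the binary name is assumed to match the project name.
APP_DIR="/app/linux-arm"
BINARY="$APP_DIR/MassTransit.WorkerService.Test.Responder"

# Mark the extension-less binary as executable, then confirm the permissions.
chmod +x "$BINARY"
ls -l "$BINARY"
```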

The next steps follow this post: https://devblogs.microsoft.com/dotnet/net-core-and-systemd/

My .service file looked like this:

Note that ExecStart must point at the extension-less executable created when you built the application with a Linux target runtime. As you can see, the daemon was now up-and-running.
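A minimal sketch of such a unit file, following the Microsoft post linked above. The unit name responder2.service, the description and the binary name are illustrative assumptions, not the exact file used here.

```shell
# Hypothetical unit name; the file is written locally first.
UNIT_NAME="responder2.service"
UNIT_FILE="./$UNIT_NAME"

cat > "$UNIT_FILE" <<'EOF'
[Unit]
Description=MassTransit Worker Service (test responder)

[Service]
# Type=notify pairs with .UseSystemd(), which tells systemd when the host is ready.
Type=notify
WorkingDirectory=/app/linux-arm
ExecStart=/app/linux-arm/MassTransit.WorkerService.Test.Responder

[Install]
WantedBy=multi-user.target
EOF

# To install and start it on the Pi (requires root):
#   sudo cp "$UNIT_FILE" /etc/systemd/system/
#   sudo systemctl daemon-reload
#   sudo systemctl start "$UNIT_NAME"
#   sudo systemctl status "$UNIT_NAME"
```

Running `sudo systemctl enable responder2.service` as well would start the daemon at boot, which wasn't needed for this experiment.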

Let’s fire a test message at it and see what happens. To monitor the log output I ran the following command: journalctl -xef.
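To cut down the noise, the journal can also be filtered to a single unit. A small sketch, assuming a hypothetical unit name of responder2.service:

```shell
# Hypothetical unit name; substitute whatever your .service file is called.
UNIT_NAME="responder2.service"

# Show the last 50 log lines for this unit only.
# Add -f to keep following new entries as messages arrive.
journalctl -u "$UNIT_NAME" -n 50 --no-pager
```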

Job done!

Conclusion

All objectives were met. I was able to run MassTransit in a Worker Service and to host that worker service in a Linux Docker container and as a systemd daemon on a Linux host.

If I were to add .UseWindowsService() to the Worker Service it would also be possible to host the service as a Windows Service.