Problem

I have been writing an AWS lambda service based on the Serverless Framework. The question is, how do I secure the lambda using AWS Cognito?

Note that this post deals with Serverless Framework configuration and not how you set up Cognito user pools, clients, etc. It is also assumed that you understand the basics of the Serverless Framework.

Solution

Basic authorizer configuration

Securing a lambda function with Cognito can be very simple. All you need to do is add some additional configuration, an authorizer, to your function in the serverless.yml file. Here’s an example:

    functionName:
      handler: My.Assembly::My.Namespace.MyClass::MyMethod
      events:
        - http:
            path: mypath/{id}
            method: get
            cors:
              origin: '*'
              headers:
                - Authorization
            authorizer:
              name: name-of-authorizer
              arn: arn:aws:cognito-idp:eu-west-1:000000000000:userpool/eu-west-1_000000000

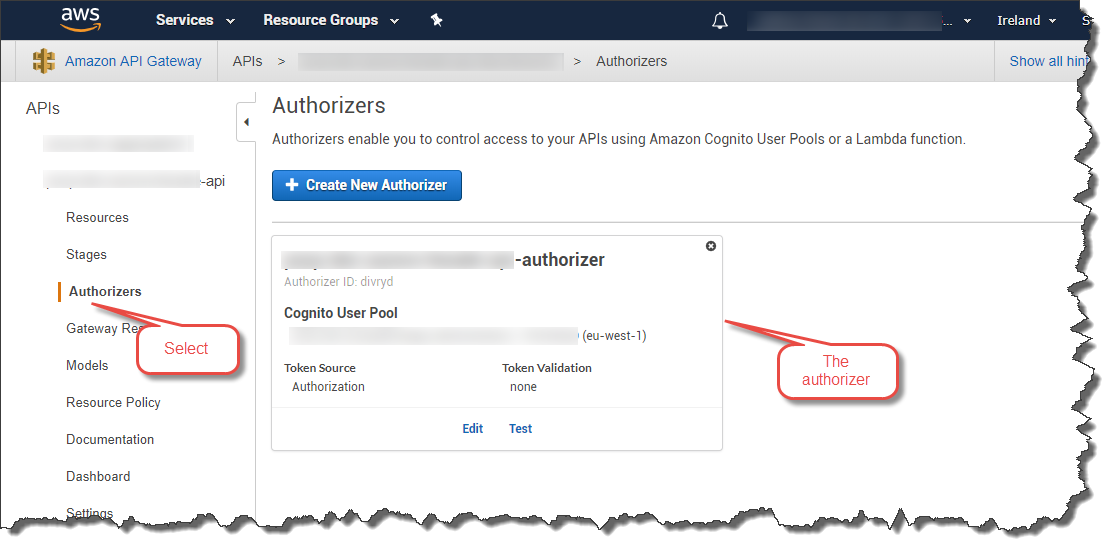

Give the authorizer a name (this will be the name of the authorizer that’s created in API Gateway). Also provide the ARN of the user pool containing the user accounts to be used for authentication. You can get the ARN from the AWS Cognito console.
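If you prefer the command line to the console, the ARN can also be read with the AWS CLI. This is a minimal sketch, assuming the CLI is installed and configured with permission to describe the pool. The pool ID and region are the placeholder values used in this post, and get_user_pool_arn is just an illustrative helper name:

```shell
# Prints the ARN of a Cognito user pool.
# $1 = user pool ID, $2 = region (both are placeholders in the example below).
get_user_pool_arn() {
  aws cognito-idp describe-user-pool \
    --user-pool-id "$1" \
    --region "$2" \
    --query 'UserPool.Arn' \
    --output text
}

# Example (uncomment to run against your own account):
# get_user_pool_arn eu-west-1_000000000 eu-west-1
```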

After you have deployed your service using the Serverless Framework (sls deploy) an authorizer with the name you have given it will be created. You can find it in the AWS console.
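The same check can be made from the command line instead of the console. The sketch below assumes the AWS CLI is configured for the account you deployed to. The REST API ID is hypothetical (the deploy output or `aws apigateway get-rest-apis` should show yours), and list_authorizers is an illustrative helper name:

```shell
# Lists the name and type of every authorizer attached to a REST API.
# $1 = REST API ID (hypothetical in the example below), $2 = region.
list_authorizers() {
  aws apigateway get-authorizers \
    --rest-api-id "$1" \
    --region "$2" \
    --query 'items[].[name,type]' \
    --output text
}

# Example (uncomment to run against your own account):
# list_authorizers abc123defg eu-west-1
```

If the deployment worked, the output should include the authorizer name from serverless.yml alongside the type COGNITO_USER_POOLS.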

An alternative is to use a shared authorizer.

Configuring a shared authorizer

It is possible to configure a single authorizer with the Serverless Framework and share it across all the functions in your API. Here’s an example:

    # Function definitions live under the top-level "functions" key
    functions:
      functionName:
        handler: My.Assembly::My.Namespace.MyClass::MyMethod
        events:
          - http:
              path: mypath/{id}
              method: get
              cors:
                origin: '*'
                headers:
                  - Authorization
              authorizer:
                type: COGNITO_USER_POOLS
                authorizerId:
                  Ref: ApiGatewayAuthorizer

    resources:
      Resources:
        ApiGatewayAuthorizer:
          Type: AWS::ApiGateway::Authorizer
          Properties:
            AuthorizerResultTtlInSeconds: 300
            IdentitySource: method.request.header.Authorization
            Name: name-of-authorizer
            RestApiId:
              Ref: "ApiGatewayRestApi"
            Type: COGNITO_USER_POOLS
            # ProviderARNs is a plain list of ARN strings
            ProviderARNs:
              - arn:aws:cognito-idp:eu-west-1:000000000000:userpool/eu-west-1_000000000

As you can see we have created an authorizer as a resource and referenced it from the lambda function. So, you can now refer to the same authorizer (called ApiGatewayAuthorizer in this case) from each of your lambda functions. Only one authorizer will be created in the API Gateway.

Note that the shared authorizer specifies an IdentitySource. In this case it’s an Authorization header in the HTTP request.

Accessing an API using an Authorization header

Once you have secured your API using Cognito you will need to pass an Identity Token as part of your HTTP request. If you are calling your API from a JavaScript-based application you could use Amplify, which has support for Cognito.

For testing using an HTTP client such as Postman you’ll need to get an Identity Token from Cognito. You can do this using the AWS CLI. Here’s an example:

aws cognito-idp admin-initiate-auth --user-pool-id eu-west-1_000000000 --client-id 00000000000000000000000000 --auth-flow ADMIN_NO_SRP_AUTH --auth-parameters USERNAME=user_name_here,PASSWORD=password_here --region eu-west-1

Obviously you’ll need to change the various parameters to match your environment (user pool ID, client ID, user name etc.). This will return 3 tokens: IdToken, AccessToken, and RefreshToken.

Copy the IdToken and paste it into the Authorization header of your HTTP request.
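If you would rather script this than copy and paste, the two steps can be sketched as a pair of shell helpers. This is only an illustration under some assumptions: the AWS CLI and curl are installed, the helper names get_id_token and call_api are made up for this example, and the API URL (including the dev stage) is a placeholder for whatever endpoint your deployment printed.

```shell
# Prints only the IdToken from the admin-initiate-auth response.
# $1 = user pool ID, $2 = client ID, $3 = user name, $4 = password, $5 = region
get_id_token() {
  aws cognito-idp admin-initiate-auth \
    --user-pool-id "$1" \
    --client-id "$2" \
    --auth-flow ADMIN_NO_SRP_AUTH \
    --auth-parameters "USERNAME=$3,PASSWORD=$4" \
    --region "$5" \
    --query 'AuthenticationResult.IdToken' \
    --output text
}

# Sends a GET request with the token in the Authorization header.
# $1 = full URL of the secured endpoint, $2 = ID token
call_api() {
  curl --silent --show-error --header "Authorization: $2" "$1"
}

# Example (uncomment and replace the placeholders to run):
# token=$(get_id_token eu-west-1_000000000 00000000000000000000000000 \
#   user_name_here password_here eu-west-1)
# call_api "https://<api-id>.execute-api.eu-west-1.amazonaws.com/dev/mypath/123" "$token"
```

The --query option simply trims the CLI’s JSON response down to the one field needed, so there is nothing to pick out by hand.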

That’s it.

Accessing claims in your function handler

As a final note, this is how you can access Cognito claims in your lambda function. I use .NET Core, so the following example is in C#. The claims are available via the incoming request object:

    foreach (var claim in request.RequestContext.Authorizer.Claims)
    {
        Console.WriteLine("{0} : {1}", claim.Key, claim.Value);
    }
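To preview the claims without deploying anything, you can decode the payload of the IdToken locally, since the middle segment of a JWT is base64url-encoded JSON. The claims printed this way should correspond to what the handler sees. This is only an inspection aid, sketched under the assumption that your base64 command accepts --decode. It does not verify the token’s signature, and decode_jwt_payload is an illustrative name:

```shell
# Prints the JSON payload (the claims) of a JWT without verifying it.
# $1 = the token, e.g. the IdToken returned by admin-initiate-auth
decode_jwt_payload() {
  local payload
  # Take the second dot-separated segment and map base64url to base64.
  payload=$(printf '%s' "$1" | cut -d '.' -f 2 | tr '_-' '/+')
  # Restore the '=' padding that base64url leaves out.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 --decode
}

# Example (uncomment and paste a real token to run):
# decode_jwt_payload "paste_id_token_here"
```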