I’ve started dipping my toes into the ESRI ArcGIS APIs and wanted to get something clear in my mind:

What is a relationship class?

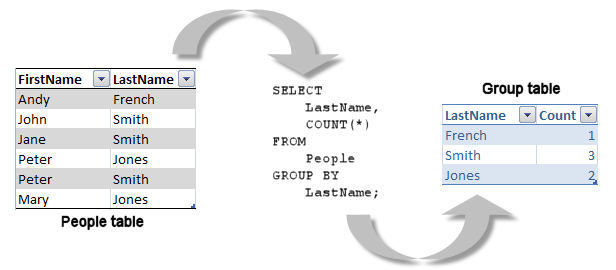

Firstly, I’ve made the somewhat obvious observation that the ESRI concepts around classes, tables, joins etc. are really a layer over the top of relational database concepts. It seems that the ArcGIS tools provide a non-SQL way to design or access the data in the geodatabase. That is sensible if you want a tool for users who are not familiar with databases or SQL. However, if you are a developer who is familiar with SQL and databases, the ArcGIS interface gets a bit confusing until you can draw a mapping between well-understood relational database concepts and what the ArcGIS tools give you.

Tables

Tables in ArcGIS are just the same as tables in an RDBMS. They have fields (columns, if you prefer) and rows.

Note that in ArcGIS a table will always have an ObjectID field. This is the unique identifier for a row and is maintained by ArcGIS. If the requirements of your application mean that you need to use your own identifiers, then you will have to add a new field to the table (in addition to the ObjectID) and use that to store your identifiers.
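To make the mapping concrete, here is a minimal relational sketch in plain SQLite (not the ArcGIS API). `OBJECTID` stands in for the system-maintained identifier, and `asset_code` is a hypothetical application-defined identifier kept in an ordinary field alongside it. The table and field names are illustrative assumptions.

```python
import sqlite3

# Relational analogue only: plain SQLite, not ArcGIS.
conn = sqlite3.connect(":memory:")

# OBJECTID: assigned by the database, never supplied by the application.
# asset_code: a hypothetical identifier owned by the application.
conn.execute("""
    CREATE TABLE pipes (
        OBJECTID   INTEGER PRIMARY KEY AUTOINCREMENT,
        asset_code TEXT NOT NULL UNIQUE,
        material   TEXT
    )
""")

conn.executemany(
    "INSERT INTO pipes (asset_code, material) VALUES (?, ?)",
    [("P-1001", "PVC"), ("P-1002", "Cast iron")],
)

rows = conn.execute(
    "SELECT OBJECTID, asset_code FROM pipes ORDER BY OBJECTID"
).fetchall()
print(rows)  # [(1, 'P-1001'), (2, 'P-1002')]
```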

Subtypes

Tables can have subtypes. A subtype is just an integer value that is used as a discriminator to group rows together (this is somewhat similar to the discriminator column used when storing a class hierarchy in a single table in NHibernate). Note that it becomes a design decision whether you represent different feature types as separate feature classes or by using subtypes.
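A rough relational picture of subtypes, sketched in SQLite under the assumption that a subtype behaves like an integer discriminator column: one `roads` table holds every kind of road, and a hypothetical code table maps each integer to a description. The codes and names are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical subtype codes for a single "roads" table.
SUBTYPES = {1: "Highway", 2: "Residential", 3: "Track"}

conn.execute("""
    CREATE TABLE roads (
        OBJECTID INTEGER PRIMARY KEY,
        subtype  INTEGER NOT NULL,  -- integer discriminator
        name     TEXT
    )
""")
conn.executemany(
    "INSERT INTO roads (subtype, name) VALUES (?, ?)",
    [(1, "M1"), (2, "High Street"), (2, "Mill Lane"), (3, "Farm track")],
)

# Group rows by their discriminator value and label each group.
counts = {
    SUBTYPES[code]: n
    for code, n in conn.execute(
        "SELECT subtype, COUNT(*) FROM roads GROUP BY subtype"
    )
}
print(counts)  # {'Highway': 1, 'Residential': 2, 'Track': 1}
```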

Domains

The term domain pops up all over the place, but what a domain actually is turns out to be quite simple:

“Attribute domains are rules that describe the legal values for a field type, providing a method for enforcing data integrity.” ***

That looks very much like a way to maintain constraints to me. There are two types of domain:

- Coded-value domains – where you specify a set of valid values for an attribute.

- Range domains – where you specify a range of valid values for an attribute.
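In relational terms the two domain types read like `CHECK` constraints. The sketch below uses SQLite purely as an analogy; it is not how ArcGIS stores or validates domains internally. The `material` codes and the `diameter_mm` range are hypothetical values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# material: like a coded-value domain (fixed set of legal codes)
# diameter_mm: like a range domain (any value between min and max)
conn.execute("""
    CREATE TABLE pipes (
        OBJECTID    INTEGER PRIMARY KEY,
        material    TEXT    CHECK (material IN ('PVC', 'CI', 'DI')),
        diameter_mm INTEGER CHECK (diameter_mm BETWEEN 50 AND 1200)
    )
""")

def try_insert(material, diameter_mm):
    """Return True if the row satisfies both constraints, else False."""
    try:
        conn.execute(
            "INSERT INTO pipes (material, diameter_mm) VALUES (?, ?)",
            (material, diameter_mm),
        )
        return True
    except sqlite3.IntegrityError:
        return False

results = [
    try_insert("PVC", 150),   # valid code, in range
    try_insert("Lead", 150),  # code not in the coded-value list
    try_insert("DI", 5000),   # outside the allowed range
]
print(results)  # [True, False, False]
```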

Feature classes

First up, a feature class is just a table. The way I think about it is that feature class could have been named feature table. The difference between a feature class and a plain table is that a feature class has a special field for holding shape data (the geometry of each feature).

All features in a feature class have the same geometry type (for example, all points or all polygons).
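A feature table can be mimicked as an ordinary table plus a shape column. This SQLite sketch stores geometry as WKT text purely for readability (real geodatabases use their own geometry storage). A `CHECK` constraint stands in for "every feature has the same geometry type". The `hydrants` table and its fields are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Ordinary table + SHAPE column; the CHECK only admits point geometries.
conn.execute("""
    CREATE TABLE hydrants (
        OBJECTID INTEGER PRIMARY KEY,
        SHAPE    TEXT NOT NULL CHECK (SHAPE LIKE 'POINT%'),
        status   TEXT
    )
""")
conn.execute(
    "INSERT INTO hydrants (SHAPE, status) VALUES ('POINT (10 20)', 'OK')"
)

# A line does not belong in a point "feature table".
try:
    conn.execute(
        "INSERT INTO hydrants (SHAPE, status) "
        "VALUES ('LINESTRING (0 0, 1 1)', 'OK')"
    )
    accepted_line = True
except sqlite3.IntegrityError:
    accepted_line = False

print(accepted_line)  # False
```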

Relationship classes

This is what started me off on this investigation.

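I visualise a relationship class as a link table in a database (i.e. the way you would normally manage many-to-many relationships in an RDBMS). Strictly speaking, ArcGIS only creates an intermediate table for many-to-many and attributed relationship classes; one-to-one and one-to-many relationships are held as a foreign key in the destination class.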
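The link-table view can be sketched in SQLite. Each row of `parcel_owner` records one parcel/owner pairing, and following the relationship in either direction is a lookup on that table. The tables and keys are hypothetical, and this is a relational analogue rather than ArcGIS code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE parcels (OBJECTID INTEGER PRIMARY KEY, parcel_id TEXT UNIQUE);
    CREATE TABLE owners  (OBJECTID INTEGER PRIMARY KEY, owner_id  TEXT UNIQUE);

    -- Link (intermediate) table: one row per related parcel/owner pair.
    CREATE TABLE parcel_owner (
        parcel_id TEXT REFERENCES parcels(parcel_id),
        owner_id  TEXT REFERENCES owners(owner_id),
        PRIMARY KEY (parcel_id, owner_id)
    );

    INSERT INTO parcels (parcel_id) VALUES ('A'), ('B');
    INSERT INTO owners  (owner_id)  VALUES ('alice'), ('bob');
    INSERT INTO parcel_owner VALUES ('A', 'alice'), ('A', 'bob'), ('B', 'bob');
""")

# Origin -> destination: owners related to parcel A.
owners_of_a = [r for (r,) in conn.execute(
    "SELECT owner_id FROM parcel_owner WHERE parcel_id = 'A' ORDER BY owner_id")]

# Destination -> origin: parcels related to bob.
parcels_of_bob = [r for (r,) in conn.execute(
    "SELECT parcel_id FROM parcel_owner WHERE owner_id = 'bob' ORDER BY parcel_id")]

print(owners_of_a, parcels_of_bob)  # ['alice', 'bob'] ['A', 'B']
```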

Here are some quotes from ESRI documentation:

“A relationship class is an object in a geodatabase that stores information about a relationship between two feature classes, between a feature class and a nonspatial table, or between two nonspatial tables. Both participants in a relationship class must be stored in the same geodatabase.” *

“In addition to selecting records in one table and seeing related records in the other, with a relationship class you can set rules and properties that control what happens when data in either table is edited, as well as ensure that only valid edits are made. You can set up a relationship class so that editing a record in one table automatically updates related records in the other table.” *

Relationship classes have a cardinality, which specifies how many objects in the origin class can relate to how many objects in the destination class. A relationship class can have one of three cardinalities:

- One-to-one

- One-to-many

- Many-to-many

No surprises there; we are following a relational data model. Cardinality can be set using the relationship cardinality constants provided by the ESRI API. Note that once a relationship class is created, the cardinality parameter cannot be altered: if you need to change the cardinality, the relationship class must be deleted and recreated.
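The three cardinalities map onto familiar relational constraints. This SQLite sketch shows two of them. One-to-many is a plain foreign key on the destination side. One-to-one is the same foreign key with a `UNIQUE` constraint added. Many-to-many would need an intermediate link table (not shown). Table names are hypothetical, and this illustrates the relational idea rather than how ArcGIS enforces cardinality.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE buildings (building_id TEXT PRIMARY KEY);

    -- One-to-many: many permits may share the same building_id.
    CREATE TABLE permits (
        permit_id   INTEGER PRIMARY KEY,
        building_id TEXT REFERENCES buildings(building_id)
    );

    -- One-to-one: UNIQUE stops a second row pointing at the same building.
    CREATE TABLE addresses (
        address_id  INTEGER PRIMARY KEY,
        building_id TEXT UNIQUE REFERENCES buildings(building_id)
    );

    INSERT INTO buildings VALUES ('B1');
""")

def link(table, building_id):
    """Try to relate a new row in `table` to a building; report success."""
    try:
        conn.execute(
            f"INSERT INTO {table} (building_id) VALUES (?)", (building_id,)
        )
        return True
    except sqlite3.IntegrityError:
        return False

results = [link("permits", "B1"), link("permits", "B1"),
           link("addresses", "B1"), link("addresses", "B1")]
print(results)  # [True, True, True, False]
```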

In a relationship, one class acts as the origin and the other as the destination. Different behaviour is associated with the origin and destination classes, so it is important to define them correctly.

In code, relationship classes are represented by objects that implement the IRelationshipClass interface.

Note that relationships can have additional attributes (this is like adding extra columns to a link table to store data specific to an instance of the relationship). See The mythical ‘attributed relationship’ in ArcGIS for more details.
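In link-table terms, an attributed relationship is a link table with extra columns describing each pairing. In this hypothetical SQLite sketch, a well passes through two aquifers, and the depth interval belongs to the relationship itself, not to either the well or the aquifer. It is a relational analogy, not ArcGIS code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE wells    (well_id    TEXT PRIMARY KEY);
    CREATE TABLE aquifers (aquifer_id TEXT PRIMARY KEY);

    -- Link table plus attributes of each individual relationship.
    CREATE TABLE well_aquifer (
        well_id      TEXT REFERENCES wells(well_id),
        aquifer_id   TEXT REFERENCES aquifers(aquifer_id),
        depth_from_m REAL,  -- hypothetical relationship attribute
        depth_to_m   REAL,  -- hypothetical relationship attribute
        PRIMARY KEY (well_id, aquifer_id)
    );

    INSERT INTO wells VALUES ('W1');
    INSERT INTO aquifers VALUES ('Upper'), ('Lower');
    INSERT INTO well_aquifer VALUES ('W1', 'Upper', 10.0, 35.0),
                                    ('W1', 'Lower', 80.0, 120.0);
""")

# Thickness of each interval, computed from the relationship's own columns.
screened = conn.execute(
    "SELECT aquifer_id, depth_to_m - depth_from_m FROM well_aquifer "
    "WHERE well_id = 'W1' ORDER BY depth_from_m"
).fetchall()
print(screened)  # [('Upper', 25.0), ('Lower', 40.0)]
```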

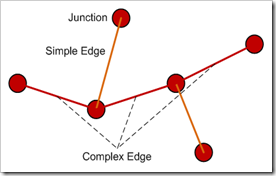

Simple relationships

In a simple relationship, related objects can exist independently of each other. The cascade behaviour is restricted: when an origin object is deleted, the key field value for the matching destination objects is set to null. Deleting a destination object has no effect on the origin object.
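The relational equivalent of that delete behaviour is a foreign key declared `ON DELETE SET NULL`. The SQLite sketch below is an analogy with hypothetical `poles` and `transformers` tables. Note that SQLite only enforces foreign keys once the `foreign_keys` pragma is switched on.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
    CREATE TABLE poles (pole_id TEXT PRIMARY KEY);
    CREATE TABLE transformers (
        transformer_id INTEGER PRIMARY KEY,
        pole_id TEXT REFERENCES poles(pole_id) ON DELETE SET NULL
    );
    INSERT INTO poles VALUES ('P1');
    INSERT INTO transformers (pole_id) VALUES ('P1');
""")

# Deleting the origin row keeps the destination row but clears its key.
conn.execute("DELETE FROM poles WHERE pole_id = 'P1'")
remaining = conn.execute(
    "SELECT transformer_id, pole_id FROM transformers"
).fetchall()
print(remaining)  # [(1, None)]
```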

Composite relationships

In a composite relationship, the destination objects are dependent on the lifetime of the origin objects. Deletes are cascaded from an origin object to all of the related destination objects.

The IRelationshipClass interface has an IsComposite property, a Boolean. A value of true indicates that the relationship is composite, and false indicates that it is simple.
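The composite behaviour corresponds to a foreign key declared `ON DELETE CASCADE`. This SQLite sketch is an analogy: deleting parcel `A` also removes its dependent notes, while parcel `B` and its note are untouched. The table names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # needed for SQLite to act on FKs
conn.executescript("""
    CREATE TABLE parcels (parcel_id TEXT PRIMARY KEY);
    CREATE TABLE parcel_notes (
        note_id   INTEGER PRIMARY KEY,
        parcel_id TEXT NOT NULL
                  REFERENCES parcels(parcel_id) ON DELETE CASCADE,
        note      TEXT
    );
    INSERT INTO parcels VALUES ('A'), ('B');
    INSERT INTO parcel_notes (parcel_id, note)
        VALUES ('A', 'surveyed'), ('A', 'disputed'), ('B', 'vacant');
""")

# Destination rows live and die with their origin row.
conn.execute("DELETE FROM parcels WHERE parcel_id = 'A'")
left = conn.execute("SELECT parcel_id, note FROM parcel_notes").fetchall()
print(left)  # [('B', 'vacant')]
```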

Relates

A relate is distinct from a relationship class and is a mechanism for defining a relationship between two datasets based on a key. In other words, this is very much like an RDBMS foreign-key relationship (where one table has a foreign-key column that references the primary key of another table). So, a relate can be used to represent one-to-one and one-to-many relationships.
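In the relational picture, following a relate amounts to looking up rows that share a key value. In this SQLite sketch the `readings` table deliberately has no declared foreign-key constraint, so the key match exists only in the query. The station data and the helper `related_readings` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stations (station_code TEXT PRIMARY KEY, name TEXT);
    CREATE TABLE readings (station_code TEXT, reading REAL);
    INSERT INTO stations VALUES ('S1', 'North'), ('S2', 'South');
    INSERT INTO readings VALUES ('S1', 4.2), ('S1', 3.9), ('S2', 7.1);
""")

def related_readings(station_code):
    """Follow the shared key from one station to its related rows."""
    return [
        r for (r,) in conn.execute(
            "SELECT reading FROM readings WHERE station_code = ? ORDER BY rowid",
            (station_code,),
        )
    ]

s1 = related_readings("S1")
print(s1)  # [4.2, 3.9]
```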

Joins

Although it is regarded as a separate construct in ArcGIS, the join is really just what you’d expect a join to be in an RDBMS:

“Joining datasets in ArcGIS will append attributes from one table onto the other based on a field common to both. When an attribute join is performed the data is dynamically joined together, meaning nothing is actually written to disk.” ***
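"Dynamically joined, nothing written to disk" is close to what a database view gives you: the join is evaluated each time it is read. This SQLite sketch uses a `LEFT JOIN` so that every county is kept even without a matching census row. That choice is an assumption of the example, and the tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE counties (fips TEXT PRIMARY KEY, name TEXT);
    CREATE TABLE census   (fips TEXT, population INTEGER);
    INSERT INTO counties VALUES ('001', 'Alpha'), ('003', 'Beta');
    INSERT INTO census   VALUES ('001', 12000);

    -- A view stores only the query, not a copy of the joined rows.
    CREATE VIEW counties_joined AS
        SELECT c.fips, c.name, p.population
        FROM counties AS c
        LEFT JOIN census AS p ON p.fips = c.fips;
""")

before = conn.execute("SELECT * FROM counties_joined ORDER BY fips").fetchall()

# The join is evaluated on demand, so new census rows appear immediately.
conn.execute("INSERT INTO census VALUES ('003', 4500)")
joined = conn.execute("SELECT * FROM counties_joined ORDER BY fips").fetchall()

print(before)  # [('001', 'Alpha', 12000), ('003', 'Beta', None)]
print(joined)  # [('001', 'Alpha', 12000), ('003', 'Beta', 4500)]
```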

References

*

Relates vs. Relationship Classes**

How to create relationship classes in the geodatabase***

Modeling Our World, Michael Zeiler, ISBN 978-1-58948-278-4

![image_thumb[3] image_thumb[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhxJMqr6cWAwJ2H7a5WyxW0qXyGcFb5d8eY6QKURrkDWFZKE_OIB_fH0Y9Cex-IkS4VbL3bJ_q8XQUt3ZRe9zF6XvrNUXuzHtM_wqnDWaAC0a9JbLtmkfEYLjGldCu8ssRbLYF6bqECi3g/?imgmax=800)